
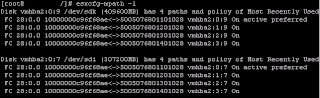

This shows that vmhba2 is currently active and has four paths to the SAN.


The -q option shows configured options for a module.

The VMware Infrastructure Remote CLI provides a command-line interface for datacenter management from a remote server. This interface is fully supported on VMware ESXi 3.5 and experimental for VMware ESX 3.5. Storage VMotion is a feature that lets you migrate a virtual machine from one datastore to another. You use it by running the svmotion command from the Remote CLI. Unlike other RCLI commands, svmotion is fully supported for VMware ESX 3.5.
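As a rough illustration of a non-interactive svmotion call, the sketch below builds the argument list in Python. The option names (`--url`, `--username`, `--password`, `--datacenter`, `--vm` with a `<vmx datastore path>:<target datastore>` value) follow the RCLI 3.5 usage as best I recall it and should be checked against `svmotion --help` on your install; the server URL, credentials, datacenter, VM path and datastore names are all hypothetical.

```python
import shlex


def build_svmotion_args(url, username, password, datacenter, vmx_path, target_datastore):
    """Return an argument list for a non-interactive svmotion run.

    vmx_path is the datastore path of the VM's .vmx file, for example
    "[storage1] myvm/myvm.vmx"; target_datastore names the destination.
    Option names are assumed from the RCLI 3.5 usage text.
    """
    return [
        "svmotion",
        f"--url={url}",
        f"--username={username}",
        f"--password={password}",
        f"--datacenter={datacenter}",
        # The VM's config file path and its new datastore, joined by ':'.
        f"--vm={vmx_path}:{target_datastore}",
    ]


if __name__ == "__main__":
    args = build_svmotion_args(
        url="https://vc.example.com/sdk",  # hypothetical VirtualCenter server
        username="admin",                  # hypothetical credentials
        password="secret",
        datacenter="DC1",                  # hypothetical datacenter name
        vmx_path="[storage1] myvm/myvm.vmx",
        target_datastore="storage2",
    )
    # Show a shell-quoted command line; on a machine with the Remote CLI
    # installed, the list itself could be passed to subprocess.run().
    print(shlex.join(args))
```

Building a list rather than a single string keeps the space inside `[storage1] myvm/...` intact without manual quoting.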

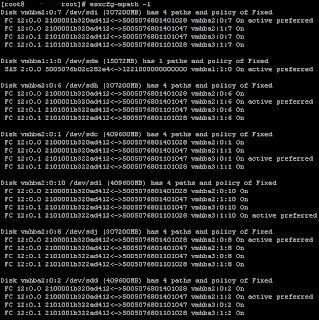

As you can see, the path policy is currently set to mru. The Most Recently Used (MRU) policy is best suited to an active/passive configuration.
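The practical difference between MRU and Fixed is what happens when a failed path comes back. With Fixed, the host moves back to the preferred path. With MRU, it stays on the path it failed over to, which avoids path thrashing on active/passive arrays. The toy model below is a simplified sketch of that behaviour, not ESX's actual implementation; the four path labels are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PathSelector:
    """Toy model of path selection for one LUN under a given policy.

    policy is "mru" or "fixed"; paths[0] is treated as the preferred path.
    """
    paths: list
    policy: str = "mru"
    dead: set = field(default_factory=set)
    active: str = field(init=False, default="")

    def __post_init__(self):
        self.active = self.paths[0]

    def _first_live(self):
        for p in self.paths:
            if p not in self.dead:
                return p
        raise RuntimeError("no live path to the LUN")

    def fail(self, path):
        """Mark a path dead; both policies fail over if it was the active one."""
        self.dead.add(path)
        if path == self.active:
            self.active = self._first_live()

    def recover(self, path):
        """Mark a path alive; only "fixed" fails back to the preferred path."""
        self.dead.discard(path)
        if self.policy == "fixed" and path == self.paths[0]:
            self.active = path


# Hypothetical labels for four paths to the same LUN.
PATHS = ["vmhba2:0:1", "vmhba2:1:1", "vmhba3:0:1", "vmhba3:1:1"]

for policy in ("mru", "fixed"):
    sel = PathSelector(PATHS, policy)
    sel.fail(PATHS[0])     # the preferred path goes down
    sel.recover(PATHS[0])  # and later comes back
    print(policy, "->", sel.active)
```

With MRU the selector stays on vmhba2:1:1 after the preferred path returns. With Fixed it moves back to vmhba2:0:1.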


Any help identifying this would be great!


Put your finger over any individual component, i.e., pNIC, interface, bay switch or core switch, to simulate a failure, and there will always be an alternative path.
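The "finger over a component" test can also be automated. Remove each component in turn and check that the host can still reach the upstream network. The sketch below does this with a breadth-first search. The topology is a hypothetical four-NIC, four-bay, two-core-switch layout, not the exact one in the diagram.

```python
from collections import deque

# Hypothetical topology: each key lists the components it connects to.
# "host" is the ESX server and "lan" the upstream network; every other
# node is a component that could fail.
TOPOLOGY = {
    "host": ["nic0", "nic1", "nic2", "nic3"],
    "nic0": ["bay1"], "nic1": ["bay2"],
    "nic2": ["bay3"], "nic3": ["bay4"],
    "bay1": ["core1"], "bay2": ["core2"],
    "bay3": ["core1"], "bay4": ["core2"],
    "core1": ["lan"], "core2": ["lan"],
    "lan": [],
}


def reachable(graph, start, goal, failed):
    """Breadth-first search from start to goal, skipping the failed node."""
    seen = {start}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if node == goal:
            return True
        for nxt in graph[node]:
            if nxt != failed and nxt not in seen:
                seen.add(nxt)
                queue.append(nxt)
    return False


def single_points_of_failure(graph, start="host", goal="lan"):
    """Return every component whose loss alone cuts start off from goal."""
    return [node for node in graph
            if node not in (start, goal)
            and not reachable(graph, start, goal, node)]


# An empty list means no single failure isolates the host.
print(single_points_of_failure(TOPOLOGY))
```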


Since only four interfaces are available, teaming and VLANs have to be used to provide resilience and to separate the Service Console (SC) and VMkernel networks.


The table columns are defined as follows. Interface is the network adapter inside a blade. Location is where that interface is located in the blade. Chassis Bay is where the interface terminates at the rear of the BladeCenter chassis. pSwitch is the external core switch that the Chassis Bay uplinks to. vSwitch is the ESX virtual switch for which the interface provides an uplink. vLAN is the ID assigned to each port group, and Service is the type of port group assigned to the vSwitch.
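One way to sanity-check a design table like this is to encode it as data and test the two goals stated above. Each vSwitch should be teamed across two chassis bays and two core switches, and port groups sharing a vSwitch should be separated by VLAN. The sketch below does this. All interface names, bay numbers, switch names and VLAN IDs are hypothetical placeholders, not the values from the original table.

```python
from collections import defaultdict
from typing import NamedTuple


class Uplink(NamedTuple):
    interface: str    # network adapter inside the blade
    location: str     # where the adapter sits in the blade
    chassis_bay: int  # chassis bay where the adapter terminates
    pswitch: str      # external core switch the bay uplinks to
    vswitch: str      # ESX vSwitch this adapter is an uplink for


class PortGroup(NamedTuple):
    vswitch: str
    vlan: int
    service: str


# Hypothetical values for illustration only.
UPLINKS = [
    Uplink("vmnic0", "onboard", 1, "core1", "vSwitch0"),
    Uplink("vmnic1", "onboard", 2, "core2", "vSwitch1"),
    Uplink("vmnic2", "expansion card", 3, "core2", "vSwitch0"),
    Uplink("vmnic3", "expansion card", 4, "core1", "vSwitch1"),
]
PORT_GROUPS = [
    PortGroup("vSwitch0", 10, "Service Console"),
    PortGroup("vSwitch0", 20, "VMkernel"),
    PortGroup("vSwitch1", 30, "Virtual Machine"),
]


def check_design(uplinks, port_groups):
    """Return a list of problems; an empty list means both checks pass."""
    problems = []
    by_vswitch = defaultdict(list)
    for u in uplinks:
        by_vswitch[u.vswitch].append(u)
    for vs, ups in by_vswitch.items():
        # Teaming only gives resilience if the uplinks take different routes.
        if len({u.chassis_bay for u in ups}) < 2:
            problems.append(f"{vs}: uplinks do not span two chassis bays")
        if len({u.pswitch for u in ups}) < 2:
            problems.append(f"{vs}: uplinks do not span two core switches")
    seen = {}
    for pg in port_groups:
        key = (pg.vswitch, pg.vlan)
        if key in seen:
            problems.append(f"{pg.vswitch}: {seen[key]} and {pg.service} share VLAN {pg.vlan}")
        seen[key] = pg.service
    return problems


print(check_design(UPLINKS, PORT_GROUPS) or "no problems found")
```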

Disabling the VMware DHCP Service on the Host Computer

It is easy enough to do this on Windows hosts; this article focuses on Linux hosts.

Follow the steps shown below for your host operating system.

Linux for Workstation 5.x and VMware Server 1.x

Linux for Workstation 6

Occasionally you may want to check the state of a virtual machine to see whether it is running. On the rare occasions when VMotion fails for one reason or another, a VM may fail to resume on the source host or to start on the destination host.

From the Service Console you can check the state of running machines by typing vmware-cmd /
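If you need to check many VMs, the `vmware-cmd` calls can be scripted. The sketch below makes several assumptions. It assumes `vmware-cmd -l` prints one registered .vmx path per line and that `vmware-cmd <vmx> getstate` prints something like `getstate() = on`. It also assumes Python 3.7 or later, which the ESX 3.x Service Console's bundled Python may not provide. Verify the output format on your own host before relying on it.

```python
import shutil
import subprocess


def parse_getstate(output):
    """Extract the state from output assumed to look like 'getstate() = on'."""
    _, sep, state = output.partition("=")
    if not sep:
        raise ValueError(f"unexpected vmware-cmd output: {output!r}")
    return state.strip()


def list_vms():
    """Return the .vmx paths printed by 'vmware-cmd -l' (one per line assumed)."""
    result = subprocess.run(["vmware-cmd", "-l"],
                            capture_output=True, text=True, check=True)
    return [line.strip() for line in result.stdout.splitlines() if line.strip()]


def vm_state(vmx_path):
    """Run 'vmware-cmd <vmx_path> getstate' and return the parsed state."""
    result = subprocess.run(["vmware-cmd", vmx_path, "getstate"],
                            capture_output=True, text=True, check=True)
    return parse_getstate(result.stdout)


# Only query VMs when vmware-cmd is actually available on this machine.
if __name__ == "__main__" and shutil.which("vmware-cmd"):
    for vmx in list_vms():
        print(vmx, vm_state(vmx))
```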

1. Click Start | Run | cmd

2. At a command prompt, type the following command, and then press ENTER:

set devmgr_show_nonpresent_devices=1

3. Type the following command in the same command prompt window, and then press ENTER:

start devmgmt.msc

4. Click Show hidden devices on the View menu in Device Manager before you can see devices that are not connected to the computer.
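Steps 2 and 3 work because Device Manager inherits `devmgr_show_nonpresent_devices=1` from the command prompt that launched it. The Python sketch below does the same thing. It passes a copy of the environment with that variable set to the Device Manager snap-in, opened through `mmc.exe` using its full path under `%SystemRoot%\System32`. It only launches on Windows, and step 4 still has to be done by hand.

```python
import os
import subprocess
import sys


def hidden_devices_env(base=None):
    """Return a copy of the environment with devmgr_show_nonpresent_devices=1."""
    env = dict(os.environ if base is None else base)
    env["devmgr_show_nonpresent_devices"] = "1"
    return env


def open_device_manager():
    """Launch Device Manager so it inherits the variable, as in steps 2 and 3."""
    system32 = os.path.join(os.environ.get("SystemRoot", r"C:\Windows"), "System32")
    msc = os.path.join(system32, "devmgmt.msc")
    # devmgmt.msc is an MMC snap-in, so open it with mmc.exe.
    subprocess.Popen(["mmc.exe", msc], env=hidden_devices_env())


if __name__ == "__main__" and sys.platform == "win32":
    open_device_manager()
```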