Link Aggregation gives no performance benefit when the Service Console and the VMkernel share two active uplinks on a virtual switch configured with the Route based on IP hash load balancing policy. The reasons can be summarised as follows.

802.3ad/LACP aggregates physical links, but the mechanisms used to determine whether a given flow of information follows one link or another are critical.

You’ll note several key things in this document that are useful in understanding 802.3ad/LACP.

- All frames associated with a given “conversation” are transmitted on the same link to prevent mis-ordering of frames. So what is a “conversation”? It is typically a single flow between two endpoints, such as a TCP connection.

- The link selection for a conversation is usually done by doing a hash on the MAC addresses (Route based on source MAC hash) or IP address (Route based on IP hash).

- Link Aggregation achieves high utilisation across multiple links when carrying multiple conversations, is less efficient with a small number of conversations, and gives no additional bandwidth with just one.

So how does the VMkernel distribute traffic over the Link Aggregation Group (LAG)?

The VMkernel distributes the load across the Link Aggregation Group by selecting an uplink to the physical network based on the source and destination IP addresses together. Each source/destination IP pair is treated as a unique route and is distributed across the LAG accordingly. An IP-based load balancing method therefore allows a virtual machine with a single NIC to use more than one physical NIC, provided it talks to more than one destination. Return traffic may come in on a different NIC, so Link Aggregation must be supported on the physical switch.
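To make the consequence concrete, here is a minimal sketch of IP-hash uplink selection. It is a simplified model and not VMware's actual code: it assumes the hash is the XOR of the source and destination IPv4 addresses taken modulo the number of uplinks. The function name `ip_hash_uplink` and the 10.0.0.x addresses are hypothetical, chosen only for illustration.

```python
import ipaddress


def ip_hash_uplink(src_ip: str, dst_ip: str, uplink_count: int) -> int:
    """Return the index of the uplink chosen for a source/destination IP pair.

    Simplified model (an assumption, not VMware's implementation): XOR the
    two addresses as 32-bit integers, then take the result modulo the
    number of uplinks in the team.
    """
    src = int(ipaddress.IPv4Address(src_ip))
    dst = int(ipaddress.IPv4Address(dst_ip))
    return (src ^ dst) % uplink_count


uplinks = ["vmnic0", "vmnic2"]

# A VMotion between two hosts is a single source/destination IP pair,
# so every frame is hashed to the same uplink.
vmotion = ip_hash_uplink("10.0.0.11", "10.0.0.12", len(uplinks))

# One source talking to several destinations can spread over both uplinks.
spread = {
    ip_hash_uplink("10.0.0.11", f"10.0.0.{last_octet}", len(uplinks))
    for last_octet in (12, 13, 14, 15)
}

print(uplinks[vmotion])                      # the single uplink used by the VMotion
print(sorted(uplinks[i] for i in spread))    # the uplinks used by the four pairs
```

Under this model the Service Console and the VMkernel each have one IP address and usually talk to very few peers, so each produces only a handful of IP pairs. A single VMotion stream is pinned to one uplink however many uplinks are active, which is why the aggregate bandwidth of the LAG is not available to it.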

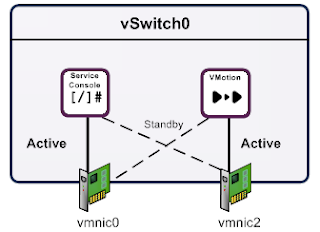

The diagram shows the target design for vSwitch0: a single virtual switch with two network interfaces and two port groups, one for Service Console networking and one for VMkernel networking.

The Service Console uses vmnic0 as the active uplink and vmnic2 as the standby uplink. The VMkernel uses vmnic2 as the active uplink and vmnic0 as the standby uplink. If a network interface, its cable, or the corresponding physical switch fails, the port group falls back to its standby network interface.
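The failover behaviour described above can be modelled in a few lines. This is only an illustrative sketch of the active/standby order, not how ESX implements it; the names `effective_uplink`, `uplinks_for` and `port_groups` are hypothetical.

```python
def effective_uplink(active, standby, link_up):
    """Return the first healthy uplink, preferring active over standby.

    Returns None when no uplink in either list has link.
    """
    for nic in list(active) + list(standby):
        if link_up.get(nic, False):
            return nic
    return None


# Failover order per port group, mirroring the design described in the text.
port_groups = {
    "Service Console": {"active": ["vmnic0"], "standby": ["vmnic2"]},
    "VMkernel": {"active": ["vmnic2"], "standby": ["vmnic0"]},
}


def uplinks_for(link_up):
    """Map each port group to the uplink it would use for a given link state."""
    return {
        name: effective_uplink(pg["active"], pg["standby"], link_up)
        for name, pg in port_groups.items()
    }


normal = uplinks_for({"vmnic0": True, "vmnic2": True})
vmnic0_failed = uplinks_for({"vmnic0": False, "vmnic2": True})

print(normal)         # each port group on its own active uplink
print(vmnic0_failed)  # both port groups share vmnic2 until vmnic0 recovers
```

In normal operation the two traffic types never share an uplink. They only share one while a failure is in effect.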

The configuration settings seen within the VI Client are shown in the figures below.

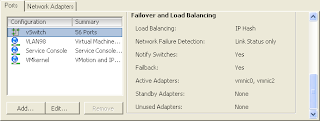

The vSwitch0 configuration

The vSwitch uses Route based on IP hash with both adapters set as active.

The vSwitch uses Route based on IP hash with both adapters set as active.The Service Conso

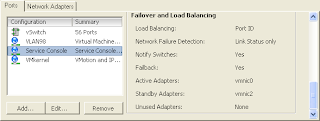

le portgroup configuration

le portgroup configurationThe Service Console port group uses vmnic0 as the active adapter and vmnic2 as the standby adapter and the load balancing policy is set to Route based on virtual port ID. This overrides the configuration inherited by the vSwitch.

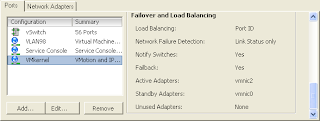

The VMkernel portgroup configuration

The VMkernel port group uses vmnic2 as the active adapter and vmnic0 as the standby adapter and the load balancing policy is set to Route based on virtual port ID. This overrides the configuration inherited by the vSwitch.

Network Utilisation

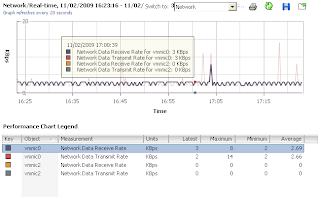

The graph shows the current utilisation of the network interfaces assigned to vSwitch0. Under normal operations only vmnic0 is used, because only the Service Console is generating traffic and its active uplink is vmnic0.

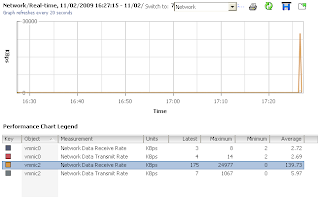

Let's see what happens when I initiate a VMotion to the same server.

The VMotion traffic uses the active network interface vmnic2 as expected.

Overriding the vSwitch policy with active/standby uplinks for the Service Console and VMkernel port groups clearly has significant advantages. It prevents VMotion traffic from flooding the Service Console uplink adapter, which can happen with an active/active configuration.